After many delays, No Time to Die hit UK cinemas a month ago, and has already made half a billion dollars around the world. The 25th James Bond movie was shot on celluloid, making it part of a small group of productions that choose to keep using the traditional medium despite digital becoming the dominant acquisition format in 2013.

Skyfall made headlines the year before that when it became the first Bond film to shoot digitally, captured on the Arri Alexa by the legendary Roger Deakins. But when director Sam Mendes returned to helm the next instalment, 2015’s Spectre, he opted to shoot on 35mm.

“With the Alexa, I missed the routine of film and the dailies,” Mendes told American Cinematographer. “Watching dailies on the big screen for the first time is kind of like Christmas. Film is difficult, it’s imprecise, but that’s also the glory of it. It had romance, a slight nostalgia… and that’s not inappropriate when dealing with a classic Bond movie.”

Early rumours suggested that Bond’s next outing, No Time to Die, would return to digital capture, but film won out again. Variety reported last year: “[Director Cary Joji] Fukunaga and cinematographer Linus Sandgren pushed to have No Time to Die shot on film instead of digital, believing it enhanced the look of the picture.”

Charlotte Bruus Christensen believed the same when she photographed the horror film A Quiet Place on 35mm. “Film captures the natural warmth, colour and beauty of the daylight,” she told British Cinematographer, “but it’s also wonderful in the dark, the way it renders the light on a face from a candle, before falling off into deep detailed blacks. It is quite simply beautiful and uniquely atmospheric.”

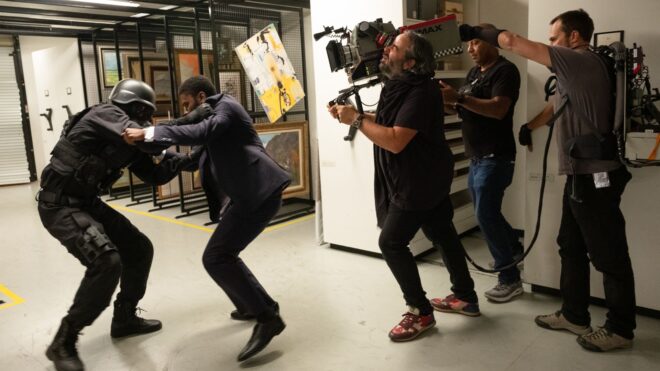

The Bond filmmakers went one step further, making No Time to Die the franchise’s first instalment to utilise IMAX 65mm (reportedly for action sequences only). In this respect they follow in the footsteps of film’s most passionate advocate, Christopher Nolan, who mixed 35mm and IMAX for The Dark Knight, The Dark Knight Rises and Interstellar.

“I think IMAX is the best film format that was ever invented,” said the celebrated director in a DGA interview. “It’s the gold standard and what any other technology has to match up to, but none have, in my opinion.” For his most recent films, Dunkirk and Tenet, Nolan eschewed 35mm altogether, mixing IMAX with standard 65mm.

Nolan’s brother Jonathan brought the same passion for celluloid to his TV series Westworld. “Jonathan told me that he had already made up his mind about film,” said pilot DP Paul Cameron in an Indiewire interview. “We wanted the western town to feel classy and elegant… There’s something tactile and formidable [about film] that’s very real.”

Cameron and another of the show’s DPs, John Grillo, both felt that film worked perfectly for the timeless, minimalist look of the desert, but when the story moved to a futuristic city for its third season Grillo expected a corresponding switch in formats. “I thought we might go digital, shooting in 4K or 6K. But it never got past my own head. Jonathan would never go for it. So we stuck with film. We’re still telling the same story, we’re just in a different place.”

One series that did recently transition from film to digital is The Walking Dead. For nine and a half seasons the zombie thriller was shot on Super 16, a decision first made for the 2010 pilot after also testing a Red, a Panavision Genesis and 35mm. “When the images came back, everyone realised that Super 16 was the format that made everything look right,” reported DP David Boyd. “With the smaller gauge and the grain, suddenly the images seemed to derive from the graphic novel itself. Every image is a step removed from reality and a step deeper into cinema.”

“You really are in there with the characters,” added producer Gale Anne Hurd during a Producers Guild of America panel. “The grain itself, it somehow makes it feel much more personal.” Creator Frank Darabont also noted that the lightweight cameras can be squeezed guerrilla-style into small spaces for a more intimate feel.

The team were forced to switch to digital capture during season ten when the COVID-19 pandemic struck. “The decision came about because there are fewer ‘touch points’ with digital than 16mm,” showrunner Angela Kang explained to the press. “We don’t have to swap out film every few minutes, for example.”

The pandemic also hit No Time to Die, pushing its release date back by 18 months and triggering the temporary closure of the Cineworld chain, which put 45,000 jobs in jeopardy. But the film's reception so far has proved that there's life yet in both celluloid capture and cinema as a whole.