Today I’m investigating the so-called normal (a.k.a. standard) lens, finding out exactly what it is, the history behind it, and how it’s relevant to contemporary cinematographers.

The normal lens in still photography

A normal lens is one whose focal length is equal to the measurement across the diagonal of the recorded image. This gives a diagonal angle of view of about 53°, which is roughly equivalent to that of the human eye, or at least to the angle within which the eye can see detail. If a photo taken with a normal lens is printed and held up in front of the real scene, with the distance from the observer to the print being equal to the diagonal of the print, then objects in the photo will look exactly the same size as the real objects.
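For anyone who wants to check the 53° figure, here is a minimal sketch of the arithmetic. It assumes an idealised rectilinear lens focused at infinity, and the function name `angle_of_view` is my own invention rather than anything standard.

```python
import math

def angle_of_view(focal_length_mm, image_dimension_mm):
    """Angle of view in degrees across the given image dimension.

    Assumes an idealised rectilinear lens focused at infinity.
    """
    return math.degrees(2 * math.atan(image_dimension_mm / (2 * focal_length_mm)))

# A normal lens has a focal length equal to the image diagonal,
# so the actual size of the format cancels out of the result.
normal_angle = angle_of_view(43.3, 43.3)
print(f"{normal_angle:.1f} degrees")  # 53.1 degrees
```

Because the focal length and the diagonal are equal, the result is the same for any format: it is always twice the arctangent of one half.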

Lenses with a shorter focal length than the normal are known as wide-angle. Lenses with a greater focal length than the normal are considered to be long lenses. (Sometimes you will hear the term telephoto used interchangeably with long lens, but a telephoto lens is technically one which has a focal length greater than its physical length.)

A still 35mm negative is 43.3mm across the diagonal, but this got rounded up quite a bit — by Leica inventor Oskar Barnack — so that 50mm is widely considered to be the normal lens in the photography world. Indeed, some photographers rarely stray from the 50mm. For some this is simply because of its convenience; it is the easiest length of lens to manufacture, and therefore the cheapest and lightest. Because it’s neither too short nor too long, all types of compositions can be achieved with it. Other photographers are more dogmatic, considering a normal lens the only authentic way to capture an image, believing that any other length falsifies or distorts perspective.
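To put a number on that rounding, the sketch below works out the diagonal of the 36mm × 24mm still frame and compares it to 50mm. The variable names are mine, and the angle again assumes an idealised lens focused at infinity.

```python
import math

FRAME_WIDTH_MM = 36.0   # standard 35mm still frame
FRAME_HEIGHT_MM = 24.0

# Diagonal of the frame by Pythagoras.
diagonal_mm = math.hypot(FRAME_WIDTH_MM, FRAME_HEIGHT_MM)

# How much longer the conventional 50mm is than the true diagonal.
ratio = 50.0 / diagonal_mm

# Diagonal angle of view of a 50mm lens on this frame.
angle_50 = math.degrees(2 * math.atan(diagonal_mm / (2 * 50.0)))

print(f"diagonal: {diagonal_mm:.1f}mm")
print(f"50mm is {ratio:.2f}x the diagonal")
print(f"50mm angle of view: {angle_50:.1f} degrees")
```

So the conventional 50mm is roughly 15% longer than a strict normal lens, and sees a little under 47° across the diagonal rather than 53°.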

The normal lens in cinematography

SMPTE (the Society of Motion Picture and Television Engineers), or indeed SMPE as it was back then, decided almost a century ago that a normal lens for motion pictures should be one with a focal length equal to twice the image diagonal. They reasoned that this would give a natural field of view to a cinema-goer sitting in the middle of the auditorium, halfway between screen and projector (the latter conventionally fitted with a lens twice the length of the camera’s normal lens).

A Super-35 digital cinema sensor – in common with 35mm motion picture film – has a diagonal of about 28mm. According to SMPE, this gives us a normal focal length of 56mm. Acclaimed twentieth-century directors like Hitchcock, Robert Bresson and Yasujiro Ozu were proponents of roughly this focal length, 50mm to be more precise, believing it to have the most natural field of view.
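As a rough illustration of what the SMPE rule means in practice, this sketch doubles the approximate 28mm diagonal and compares the resulting angle of view with that of a 50mm. The function names are hypothetical, and the angles assume an idealised lens focused at infinity.

```python
import math

SUPER35_DIAGONAL_MM = 28.0  # approximate figure quoted above

def smpe_normal_focal_length(diagonal_mm):
    """SMPE's definition of normal: twice the image diagonal."""
    return 2 * diagonal_mm

def diagonal_angle_of_view(focal_length_mm, diagonal_mm):
    """Diagonal angle of view in degrees (idealised lens at infinity)."""
    return math.degrees(2 * math.atan(diagonal_mm / (2 * focal_length_mm)))

smpe_normal = smpe_normal_focal_length(SUPER35_DIAGONAL_MM)

for focal in (smpe_normal, 50.0):
    angle = diagonal_angle_of_view(focal, SUPER35_DIAGONAL_MM)
    print(f"{focal:.0f}mm: {angle:.1f} degrees")
```

The 56mm comes out at about 28° across the diagonal and the 50mm at about 31°, both far narrower than the 53° of the still photographer's normal lens.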

Of course, the 1920s SMPE committee, living in a world where films were only screened in cinemas, could never have predicted the myriad devices on which movies are watched today. Right now I’m viewing my computer monitor from a distance about equal to the diagonal of the screen, but to hold my phone at the distance of its diagonal would make it uncomfortably close to my face. Large movie screens are still closer to most of the audience than their diagonal measurement, just as they were in the twenties, but smaller multiplex screens may be further away than their diagonals, and TV screens vary wildly in size and viewing distance.
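The geometry behind this can be sketched in a few lines: if the screen fills the same angle for the viewer as the lens saw on set, then the focal length relates to the sensor diagonal as the viewing distance relates to the screen diagonal. The function name and the example screens below are illustrative assumptions of mine, not measurements.

```python
def matched_focal_length(sensor_diagonal_mm, screen_diagonal, viewing_distance):
    """Focal length whose angle of view matches the angle the screen fills.

    screen_diagonal and viewing_distance can be in any unit,
    as long as both use the same one.
    """
    return sensor_diagonal_mm * viewing_distance / screen_diagonal

SENSOR_DIAGONAL_MM = 28.0  # approximate Super-35 diagonal

# (label, screen diagonal, viewing distance): illustrative figures only.
scenarios = [
    ("monitor at one diagonal", 27.0, 27.0),
    ("cinema seat at two diagonals", 1.0, 2.0),
    ("phone held at three diagonals", 6.0, 18.0),
]

for label, screen, distance in scenarios:
    focal = matched_focal_length(SENSOR_DIAGONAL_MM, screen, distance)
    print(f"{label}: {focal:.0f}mm")
```

Under these assumptions the monitor viewer is matched by a 28mm, the SMPE cinema-goer by a 56mm, and the phone viewer by something longer still.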

The new normal

To land in the middle of the various viewing distances common today, I would argue that filmmakers should revert to the photography standard of a normal focal length equal to the diagonal, so 28mm for a Super-35 sensor.

According to Noam Kroll, “Spielberg, Scorsese, Orson Wells, Malick, and many other A-list directors have cited the 28mm lens as one of their most frequently used and in some cases a favorite [sic]”.

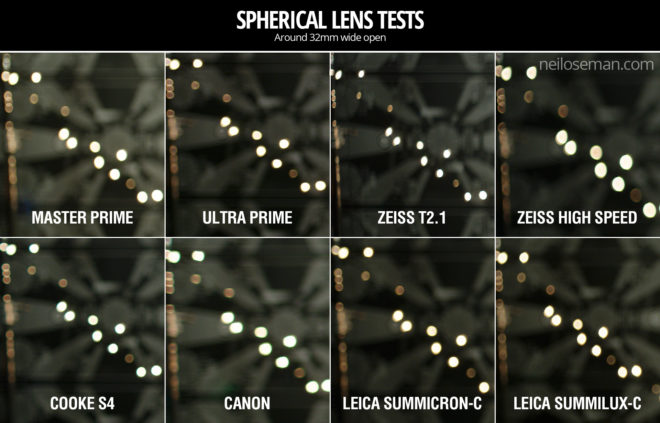

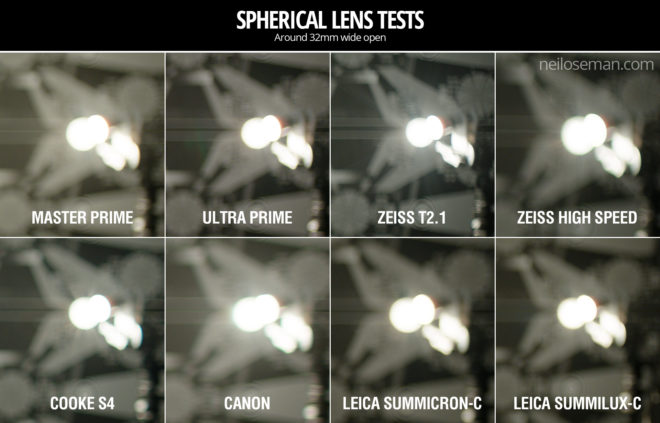

I have certainly found lenses around that length to be the most useful on set. A 32mm is often my first choice for handheld, Steadicam, or anything approaching a POV. It’s great for wides because it compresses things a little and crops out unnecessary information while still taking plenty of the scene in. It’s also good for mids and medium close-ups, making the viewer feel involved in the conversation.

When I had to commit to a single prime lens to seal up in a splash housing for a critical ocean scene in The Little Mermaid, I quickly chose a 32mm, knowing that I could get wides and tights just by repositioning myself.

I’ve found a 32mm useful in situations where coverage was limited. Many scenes in Above the Clouds were captured as a simple shot-reverse: both mids, both on the 32mm. This was done partly to save time, partly because most of the sets were cramped, and partly because it was a very effective way to get close to the characters without losing the body language, which was essential for the comedy. We basically combined the virtues of wides and close-ups into a single shot size!

In addition to the normal lens’ own virtues, I believe that it serves as a useful marker post between wide lenses and long lenses. In the same way that an editor should have a reason to cut, in a perfect world a cinematographer should have a reason to deviate from the normal lens. Choose a lens shorter than the normal and you are deliberately choosing to expand the space, to make things grander, to enhance perspective and push planes apart. Select a lens longer than the normal and you’re opting for portraiture, compression, stylisation, maybe even claustrophobia. Thinking about all this consciously and consistently throughout a production can add immeasurably to the impact of the story.

You can use data supplied by the lamp manufacturer to calculate the exposure it will provide, which is very useful in preproduction when deciding what size of lamps you need to hire. There are apps for this, such as the

Some believe that light meters are unnecessary in today’s digital landscape, but I disagree. Most of the methods listed below require the camera, but the camera may not always be handy – on a location recce, for example. Or during production, it would be inconvenient to interrupt the ACs while they’re rigging the camera onto a crane or Steadicam. This is when having a light meter on your belt becomes very useful.

Now we move along the light path and consider light after it has been reflected off the subject. This is what a spot meter measures. It has a viewfinder with which you target the area you want to read, and it is capable of metering things that would be impractical or impossible to measure with an incident meter. If you had a bright hillside in the background of your shot, you would need to drive over to that hill and climb it to measure the incident light; with a spot meter you would simply stand at the camera position and point it in the right direction. A spot meter can also be used to measure light sources themselves: the sky, a practical lamp, a flame and so on.

Your smartphone can be turned into a spot meter with a suitable app, such as

A waveform plots luminance on the vertical axis, with the horizontal axis matching the horizontal position of those luminance values within the frame. The density of the plotting reveals the prevalence of the values. A waveform that was dense in the bottom left, for example, would indicate a lot of dark tones on the left-hand side of frame. Since the vertical (luminance) axis represents IRE (Institute of Radio Engineers) values, waveforms are ideal when you need to expose to a given IRE, for example when calibrating a system by shooting a grey card. Another common example would be a visual effects supervisor requesting that a green screen be lit to 50 IRE.
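To make the idea concrete, here is a toy sketch of how such a plot could be built from pixel values. It assumes the luminance of each pixel is already expressed on a 0 to 100 scale standing in for IRE, and the `waveform` function and the tiny test frame are hypothetical; a real scope reads the video signal rather than a Python list.

```python
def waveform(luma_rows, levels=10):
    """Count pixels per (luminance band, column).

    luma_rows is a list of rows of integer luminance values from 0 to 100.
    Returns counts[band][column], where band 0 is the darkest.
    """
    width = len(luma_rows[0])
    counts = [[0] * width for _ in range(levels)]
    for row in luma_rows:
        for x, value in enumerate(row):
            # Integer maths avoids rounding surprises at band edges.
            band = min(levels - 1, value * levels // 100)
            counts[band][x] += 1
    return counts

# A tiny 4x4 frame: dark on the left, mid-grey in column 2, bright on the right.
frame = [
    [5, 8, 50, 95],
    [5, 12, 50, 95],
    [8, 8, 52, 90],
    [2, 15, 58, 99],
]

counts = waveform(frame)

# Print brightest band at the top, as a scope would display it.
for band in reversed(range(len(counts))):
    print(f"{band * 10:3d}+ IRE | " + " ".join(str(n) for n in counts[band]))
```

The densest counts sit in the bottom-left of the printout, which is exactly the dark left-hand side of the frame, while column 2 clusters in the 50 IRE band, much as an evenly lit green screen would.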
