It is 1959. Just two years have passed since the launch of the USSR’s Sputnik 1 satellite blew the starting whistle for the Space Race. Sputnik 2, carrying poor Laika the dog, and the American satellite Explorer 1 swiftly followed. Crewed spaceflight is still a couple of years away, but already the eyes of the world’s superpowers have turned to Earth’s nearest neighbour: the moon.

Early attempts at sending probes to the moon were disastrous, with the first three of America’s Pioneer craft crashing back to Earth, while a trio of Soviet attempts exploded on launch. Finally the USSR’s Luna 1 – intended to crash-land on the surface – at least managed a fly-by. Luna 2 reached its target, becoming the first man-made object on the moon in September 1959.

The stage is now set for Luna 3. Its mission: to photograph the far side of the moon.

Our planet and its natural satellite are in a state known as tidal locking, meaning that the moon takes the same length of time to orbit Earth as it does to rotate on its own axis. The result is that the same side of the moon always faces us here on Earth. Throughout all of human history, the far side has been hidden from us.
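The geometry of tidal locking can be checked with a toy model. This Python sketch tracks the angle between a fixed landmark on the moon and the direction to Earth. It assumes a circular orbit in the same plane as the moon's spin and ignores libration, the small rocking motions that in reality let observers on Earth see slightly more than half the surface. The function name and the 20-day comparison spin are hypothetical, chosen only for illustration.

```python
# Toy model of tidal locking. Assumptions: circular, coplanar orbit; no libration.
SIDEREAL_MONTH_DAYS = 27.32  # approximate time for one lunar orbit


def landmark_offset_deg(t_days: float, orbit_period: float, spin_period: float) -> float:
    """Angle (0 to 360 degrees) between a lunar landmark and the direction to Earth.

    At t = 0 the landmark points straight at Earth (offset 0). The orbit swings
    the Earth-direction round by 360 degrees per orbit_period, while the moon's
    spin turns the landmark by 360 degrees per spin_period.
    """
    orbit_angle = 360.0 * t_days / orbit_period
    spin_angle = 360.0 * t_days / spin_period
    return (spin_angle - orbit_angle) % 360.0


if __name__ == "__main__":
    for day in (0, 7, 14, 21, 28):
        locked = landmark_offset_deg(day, SIDEREAL_MONTH_DAYS, SIDEREAL_MONTH_DAYS)
        faster = landmark_offset_deg(day, SIDEREAL_MONTH_DAYS, 20.0)  # hypothetical spin
        print(f"day {day:2d}: locked {locked:6.1f} deg, faster spin {faster:6.1f} deg")
```

With equal periods the offset stays at zero, so the same face keeps pointing at Earth; with a faster spin the landmark drifts round and, in this model, the far side would eventually turn towards us.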

But how do you take a photograph a quarter of a million miles away and return that image to Earth with 1950s technology?

At this point in time, television has been around for twenty years or so. But the images are transient, each frame dancing across the tube of a TV camera at, say, Alexandra Palace, oscillating through the air as VHF waves, zapping down a wire from an aerial, and ultimately driving the deflecting coils of a viewer’s cathode ray tube to paint that image on the phosphorescent screen for a 50th of a second. And then it’s gone forever.

For a probe on the far side of the moon, with 74 million million million tonnes of rock between it and the earthbound receiving station, live transmission is not an option. The image must somehow be captured and stored.

Video tape recorders have been invented by 1959, but the machines are enormous and expensive. At the BBC, most non-live programmes are still recorded by pointing a film camera at a live TV monitor.

And it is film that will make Luna 3’s mission possible. Enemy film, in fact, which the USSR recovered, unexposed, from a CIA spy balloon. Resistant to radiation and extremes of temperature, the 35mm isochromatic stock is chosen by Soviet scientists to be loaded into Luna 3’s AFA-Ye1 camera, part of its Yenisey-2 imaging system.

Luna 3 launches on October 4th, 1959 from Baikonur Cosmodrome in what will one day be Kazakhstan. A modified R-7 rocket inserts the probe into a highly elliptical Earth orbit which, after some overheating and communications issues are resolved, brings it within range of the moon three days later.

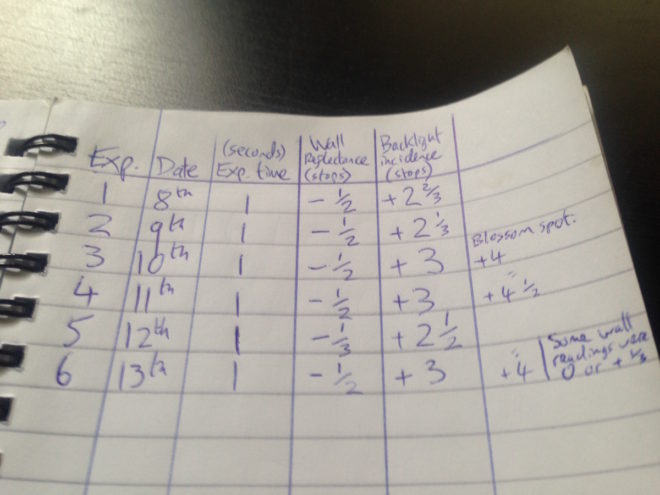

The mission has been timed so that the far side of the moon is in sunlight when Luna 3 reaches it. A pioneering three-axis stabilisation system points the craft (and thus the camera, which cannot pan independently) at the side of the moon which no-one has seen before. A photocell detects the bright surface and triggers the Yenisey-2 system. Alternating between 200mm f/5.6 and 500mm f/9.5 lenses, the camera exposes 29 photographs on the ex-CIA film.

Next that film must be processed, and Luna 3 can’t exactly drop it off at Snappy Snaps. In fact, the Yenisey-2 system contains a fully automated photo lab which develops, fixes and dries the film, all inside a 1.3x1m cylinder tumbling through the vacuum of space at thousands of miles per hour.

Now what? Returning a spacecraft safely to Earth is beyond human ability in 1959, though the following year’s Vostok missions will change all that. Once Luna 3 has swung around the moon and has line of sight to the receiving stations on Earth, the photographic negatives must be converted to radio broadcasts.

To that end, Yenisey-2 incorporates a cathode ray tube which projects a beam of light through the negative, scanning it at a 1,000-line resolution. A photocell on the other side receives the beam, producing a voltage that falls as the density of the negative rises: the darker the spot on the film, the less light gets through. This voltage frequency-modulates a radio signal in the same way that fax machines use frequency-modulated audio to send images along phone lines.
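A minimal Python sketch of the scan-and-transmit chain may help. It is an illustration under stated assumptions, not a reconstruction of Yenisey-2: the sample rate, the tone range of 1,500 to 2,300 Hz, the time spent on each spot and all the function names are hypothetical. The photocell is assumed to give a voltage directly proportional to the light passing through the film, which for optical density D is a fraction 10^-D. On the receiving side it uses zero-crossing counting, one simple way to demodulate FM; the demodulator the ground stations actually used may well have differed.

```python
import math

# Hypothetical parameters for illustration only; not Luna 3's real values.
SAMPLE_RATE = 48_000        # samples per second of the simulated signal
SAMPLES_PER_PIXEL = 4_800   # 0.1 s of signal per scanned spot
F_DARK = 1_500.0            # Hz sent when no light reaches the photocell
F_LIGHT = 2_300.0           # Hz sent when all the light gets through


def photocell_voltage(density: float) -> float:
    """Relative photocell output for a spot of negative with the given optical density.

    Density D lets through a fraction 10**-D of the light, so a dense (heavily
    exposed) spot gives a low voltage. Assumes voltage is proportional to light.
    """
    return 10 ** (-density)


def fm_modulate(voltages: list[float]) -> list[float]:
    """Continuous-phase FM: each voltage (0..1) sets the tone for one pixel."""
    samples = []
    phase = 0.0
    for v in voltages:
        freq = F_DARK + v * (F_LIGHT - F_DARK)
        for _ in range(SAMPLES_PER_PIXEL):
            phase += 2 * math.pi * freq / SAMPLE_RATE
            samples.append(math.sin(phase))
    return samples


def fm_demodulate(samples: list[float]) -> list[float]:
    """Recover one voltage per pixel by counting zero crossings in its time slot."""
    window_seconds = SAMPLES_PER_PIXEL / SAMPLE_RATE
    voltages = []
    for start in range(0, len(samples), SAMPLES_PER_PIXEL):
        window = samples[start:start + SAMPLES_PER_PIXEL]
        crossings = sum(1 for a, b in zip(window, window[1:]) if (a < 0) != (b < 0))
        freq = crossings / (2 * window_seconds)  # a sine wave crosses zero twice per cycle
        voltages.append((freq - F_DARK) / (F_LIGHT - F_DARK))
    return voltages


if __name__ == "__main__":
    scan_line = [0.0, 0.3, 1.0, 2.0]  # optical densities along one scan line
    sent = [photocell_voltage(d) for d in scan_line]
    received = fm_demodulate(fm_modulate(sent))
    for d, s, r in zip(scan_line, sent, received):
        print(f"density {d:.1f}: sent {s:.3f}, received {r:.3f}")
```

Denser spots of negative come out as lower tones, and the receiver turns each tone back into a voltage to within a fraction of a percent here. A practical system would also need some way to mark where each scan line starts, which this sketch leaves out.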

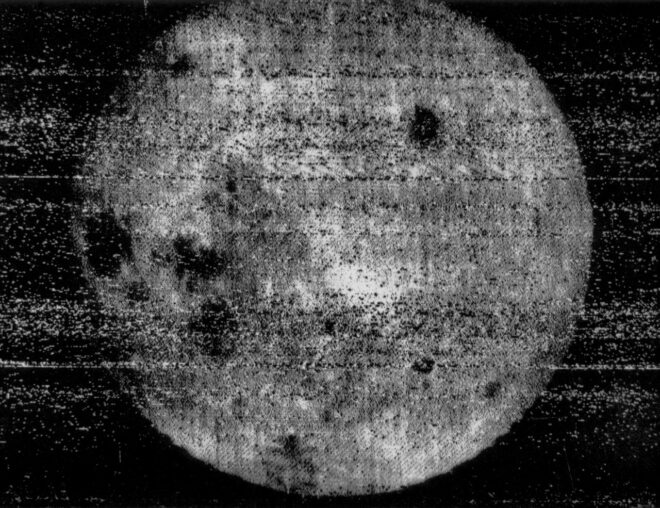

Attempts to transmit the photographs begin on October 8th, and after several failures, 17 images are eventually reconstructed by the receiving stations in Crimea and Kamchatka. They are noisy, they are blocky, they are monochromatic, but they show a sight that has been hidden from human eyes since the dawn of time. Featuring many more craters and mountains and many fewer “seas” than the side we’re used to, Luna 3’s pictures prompt a complete rethink of the moon’s history.

Its mission accomplished, the probe spirals in a decaying orbit until it finally burns up in Earth’s atmosphere. In 1961, Yuri Gagarin’s historic flight will capture the public imagination, and unmanned space missions will suddenly seem much less interesting.

But next time you effortlessly WhatsApp a photo to a friend, spare a thought for the remarkable engineering that one day sent never-before-seen photographs across the gulf of space without the aid of digital imaging.

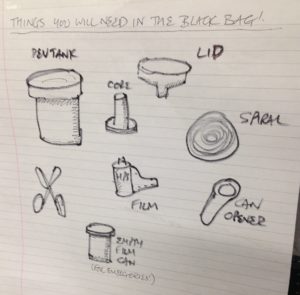

To make the whole thing a little more fun and primitive, I decided to shoot using