Recently I’ve been pondering which camera to shoot an upcoming project on, so I consulted the ASC’s comparison chart. Amongst the many specs compared is dynamic range, and I noticed that the ARRI Alexa’s is given as 14+ stops, while the Blackmagic URSA’s is 15. Having used both cameras a fair bit, I can tell you that there’s no way in Hell that the URSA has a higher dynamic range than the Alexa. So what’s going on here?

What is dynamic range?

To put it simply, dynamic range is the level of contrast that an imaging system can handle. To quote Alan Roberts, who we’ll come back to later:

This is normally calculated as the ratio of the exposure which just causes white clipping to the exposure level below which no details can be seen.

A photosite on a digital camera’s sensor outputs a voltage proportional to the amount of light hitting it, but at some point the voltage reaches a maximum, and no matter how much more light you add, it won’t change. At the other end of the scale, a photosite may receive so little light that it outputs no voltage, or at least nothing that’s discernible from the inherent electronic noise in the system. These upper and lower limits of brightness may be narrowed by image processing within the camera, with RAW recording usually retaining the full dynamic range, while linear Rec. 709 severely curtails it.
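To make Roberts’s definition concrete, here’s a minimal Python sketch that expresses dynamic range in stops as the base-2 logarithm of the clipping level divided by the noise floor. The electron counts in the example are hypothetical numbers for an imaginary photosite, not figures for any real sensor.

```python
import math

def dynamic_range_stops(clip_level: float, noise_floor: float) -> float:
    """Dynamic range in stops: log2 of the ratio between the signal at
    white clipping and the signal at the noise floor."""
    if clip_level <= 0 or noise_floor <= 0:
        raise ValueError("levels must be positive")
    if noise_floor >= clip_level:
        raise ValueError("noise floor must be below the clipping level")
    return math.log2(clip_level / noise_floor)

# Hypothetical photosite: clips at 30,000 electrons, noise floor of 3 electrons
print(round(dynamic_range_stops(30_000, 3), 2))
```

Because the ratio here is 10,000:1, the result lands a little over 13 stops.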

In photography and cinematography, we measure dynamic range in stops: doublings and halvings of light, which I explain fully in this article. One stop is a ratio of 2:1, five stops are 32:1, and thirteen stops are 8,192:1, almost 10,000:1.
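Because each stop is a doubling, converting between stops and contrast ratios comes down to powers of two and base-2 logarithms. This short sketch prints a few values, including the 14 and 15 stops from the ASC chart, which shows that a one-stop difference on a spec sheet implies a doubling of the contrast the sensor can hold.

```python
import math

def stops_to_ratio(stops: float) -> float:
    """Each stop doubles the light, so n stops is a ratio of 2**n : 1."""
    return 2.0 ** stops

def ratio_to_stops(ratio: float) -> float:
    """Inverse: the number of doublings needed to reach the ratio."""
    return math.log2(ratio)

for n in (1, 5, 13, 14, 15):
    print(f"{n:>2} stops = {stops_to_ratio(n):,.0f}:1")
```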

It’s worth pausing here to point out the difference between dynamic range and latitude, a term sometimes treated as a synonym, though it isn’t one. Latitude is a measure of how much the camera can be over- or under-exposed without losing any detail, and it depends on both the dynamic range of the camera and the dynamic range of the scene. (A low-contrast scene will allow more latitude for incorrect exposure than a high-contrast scene.)
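As a rough illustration, here’s a deliberately simplified model in which latitude is whatever is left over when the scene’s dynamic range is subtracted from the camera’s. It ignores how a camera’s curve divides its range above and below middle grey, and the function name and numbers are my own hypothetical choices.

```python
def latitude_stops(camera_dr: float, scene_dr: float) -> float:
    """Total exposure error, in stops, that a scene can tolerate before
    either its highlights clip or its shadows sink into the noise.

    Simplified model: the camera's range is a fixed window, and whatever
    the scene doesn't fill is latitude to split between over- and
    under-exposure.
    """
    return max(0.0, float(camera_dr - scene_dr))

# A hypothetical 14-stop camera facing scenes of different contrast
print(latitude_stops(14, 8))   # low-contrast scene: plenty of latitude
print(latitude_stops(14, 13))  # high-contrast scene: very little
print(latitude_stops(14, 16))  # scene exceeds the camera: something is lost either way
```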

Problems of Measurement

Before digital cinema cameras were developed, video had a dynamic range of about seven stops. You could measure this relatively easily by shooting a greyscale chart and observing the waveform of the recorded image to see where the highlights levelled off and the shadows disappeared into the noise floor. With today’s dynamic ranges into double digits, simple charts are no longer practical, because you can’t manufacture white enough paper or black enough ink.

For his excellent video on dynamic range, Filmmaker IQ’s John Hess built a device fitted with a row of 1W LEDs, using layers of neutral density gel to make each one a stop darker than its neighbour. For the purposes of his demonstration, this works fine, but as Phil Rhodes points out on RedShark News, you start running into the issue of the dynamic range of the lens.
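The arithmetic behind a gel-based device like Hess’s is simple, assuming each gel layer cuts the light by exactly one stop (nominally ND 0.3, since log10 2 ≈ 0.301). Densities add as layers stack while brightness halves with each one, so a 15-stop range needs the darkest patch to sit behind a total density of about 4.5. The function names here are hypothetical.

```python
import math

# One stop halves the light; as an optical density that is log10(2),
# which gels and filters round to "ND 0.3".
ONE_STOP_DENSITY = math.log10(2)

def patch_brightness(layers: int, led_output: float = 1.0) -> float:
    """Relative brightness of an LED behind `layers` one-stop ND gels."""
    return led_output * 0.5 ** layers

def total_density(layers: int) -> float:
    """Optical density of stacked gels: densities add, transmissions multiply."""
    return layers * ONE_STOP_DENSITY

# A hypothetical 16-patch device spanning 15 stops
for layers in (0, 5, 10, 15):
    print(f"{layers:>2} layers: brightness {patch_brightness(layers):.6f}, "
          f"density {total_density(layers):.2f}")
```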

It may seem strange to think that a lens has dynamic range, and in the past when I’ve heard other DPs talk about certain glass being more or less contrasty, I admit that I haven’t thought much about what that means. What it means is flare, and not the good anamorphic streak kind, but the general veiling whereby a strong light shining into the lens will raise the overall brightness of the image as it bounces around the different elements. This lifts the shadows, producing a certain amount of milkiness. Even with high-contrast lenses, which are less prone to veiling, the brightest light on your test device will cause some glare over the darkest one when you’re measuring the kind of dynamic range today’s cameras enjoy.
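To get a feel for how much veiling glare matters, here’s a toy model that adds a uniform veil, a fixed fraction of the brightest patch, to every patch of a 15-stop test device and reports the range a camera would appear to measure. Real flare isn’t uniform (it falls off with distance from the source, which is why chart designers try to isolate the patches), and the veil fractions are illustrative assumptions rather than measurements of any lens.

```python
import math

def apparent_range_stops(true_stops: float, veil: float) -> float:
    """Range a camera would measure across a test device whose patches truly
    span `true_stops`, if lens flare adds a uniform veil of `veil` times the
    brightest patch's luminance to every patch (a simplifying assumption)."""
    brightest = 1.0
    darkest = 2.0 ** -true_stops
    glare = veil * brightest
    return math.log2((brightest + glare) / (darkest + glare))

# Hypothetical veil fractions, from a perfect lens to a fairly flary one
for veil in (0.0, 1e-5, 1e-4, 1e-3):
    print(f"veil {veil:g}: {apparent_range_stops(15, veil):.1f} stops")
```

In this model, even a veil of a thousandth of the brightest patch squeezes a true 15-stop device down to under 10 measurable stops.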

Manufacturer Measurements

Going back to my original query about the Alexa versus the URSA, let’s see exactly what the manufacturers say. ARRI specifically states that its sensor’s dynamic range is over 14 stops “as measured with the ARRI Dynamic Range Test Chart”. So what is this chart and how does it work? The official sales blurb runs thusly:

The ARRI DRTC-1 is a special test chart and analysis software for measurement of dynamic range and sensitivity of digital cameras. Through a unique stray light reduction concept this system is able to accurately measure up to 15.5 stops of dynamic range.

The “stray light reduction” is presumably to reduce the veiling mentioned earlier and provide more accurate results. This could be as simple as covering or turning off the brighter lights when measuring the dimmer ones.

I found a bit more information about the test chart in a 2011 camera shoot-out video, from that momentous time when digital was supplanting film as the cinematic acquisition format of choice. Rather than John Hess’s ND gel technique, the DRTC-1 opts for something else to regulate its light output, as ARRI’s Michael Bravin explains in the video:

There’s a piece of motion picture film behind it that’s checked with a densitometer, and what you do is you set the exposure for your camera, and where you lose detail in the vertical and horizontal lines is your clipping point, and where you lose detail because of noise in the shadow areas is your lowest exposure… and in between you end up finding the number of stops of dynamic range.
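Bravin describes a visual judgement, but the same logic can be sketched numerically. In this hypothetical Python function, the chart readings are (mean, noise) pairs ordered brightest first, one stop apart. The clipping point is where adjacent steps stop being distinguishable, and the noise floor is the last step whose signal stays above a chosen multiple of the noise. The clip tolerance and SNR threshold are my own assumptions, not ARRI’s parameters, and the readings are made up.

```python
from typing import List, Sequence, Tuple

def usable_stops(readings: Sequence[Tuple[float, float]],
                 clip_tolerance: float = 0.05,
                 min_snr: float = 1.0) -> int:
    """Count the stops between the clipping point and the noise floor.

    `readings` holds (mean_signal, noise_std) for each chart step, ordered
    brightest first, with each step nominally one stop darker than the last.
    """
    n = len(readings)
    # Clipping point: skip past steps that have flattened out at the top,
    # i.e. that are not clearly brighter than the step below them.
    top = 0
    while top < n - 1 and readings[top + 1][0] >= readings[top][0] * (1 - clip_tolerance):
        top += 1
    # Noise floor: walk down from the clipping point while each step's
    # mean signal still stands at least `min_snr` times above its noise.
    bottom = top
    while bottom < n - 1 and readings[bottom + 1][0] >= min_snr * readings[bottom + 1][1]:
        bottom += 1
    return bottom - top

# Hypothetical chart readings: the two brightest steps have clipped at 1.0,
# then each step halves, with a constant noise level of 0.0005.
readings: List[Tuple[float, float]] = [(1.0, 0.0005), (1.0, 0.0005)]
readings += [(0.5 ** k, 0.0005) for k in range(1, 16)]

print(usable_stops(readings))
```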

Blackmagic Design do not state how they measure the dynamic range of their cameras, but they may use a DSC Labs Xyla. This illuminated chart boasts a shutter system which “allows users to isolate and evaluate individual steps”, plus a “stepped xylophone shape” to minimise flare problems.

Art Adams, a cinema lens specialist at ARRI, and someone who’s frequently quoted in Blain Brown’s Cinematography: Theory & Practice, told Y.M. Cinema Magazine:

I used to do a lot of consulting with DSC Labs, who make camera test charts, so I own a 20-stop dynamic range chart (DSC Labs Xyla). This is what most manufacturers use to test dynamic range (although not ARRI, because our engineers don’t feel it’s precise enough) and I see what companies claim as usable stops. You can see that they are just barely above the noise floor.
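Adams’s point about “usable” stops comes down to where you draw the line above the noise floor. Here’s a simple sketch, assuming a sensor whose noise is photon shot noise plus a fixed read noise, that solves for the weakest signal reaching a given signal-to-noise ratio and reports the resulting range in stops. The full-well capacity and read noise are hypothetical values, and the thresholds are illustrative rather than any manufacturer’s criterion.

```python
import math

def min_signal_for_snr(snr: float, read_noise: float) -> float:
    """Smallest signal S (electrons) with S / sqrt(S + read_noise**2) >= snr.

    Squaring gives S**2 - snr**2 * S - snr**2 * read_noise**2 = 0, whose
    positive root is returned.
    """
    t2 = snr ** 2
    return (t2 + math.sqrt(t2 ** 2 + 4 * t2 * read_noise ** 2)) / 2

def usable_range_stops(full_well: float, read_noise: float, snr: float) -> float:
    """Stops between clipping (full well) and the signal that just meets `snr`."""
    return math.log2(full_well / min_signal_for_snr(snr, read_noise))

# Hypothetical sensor: 30,000 e- full well, 3 e- read noise
for snr in (1, 2, 4, 10):
    print(f"SNR >= {snr:>2}: {usable_range_stops(30_000, 3, snr):.1f} stops")
```

With these numbers the same imaginary sensor spans roughly 13 stops at an SNR of 1 but only about 8 at an SNR of 10, so a published figure means little unless you know which threshold was used.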

Conclusions

Obviously these ARRI folks I keep quoting may be biased. I wanted to find an independent test that measured both Blackmagics and Alexas under the same conditions and with the same methodology, but I couldn’t find one. There is plenty of anecdotal evidence that Alexas have the bigger dynamic range (indeed, it’s widely accepted as fact), but quantifying the difference is harder. The most solid thing I could find is this, from a 2017 article about the Blackmagic URSA Mini 4.6K (first generation):

The camera was measured at just over 14 stops of dynamic range in RAW 4:1 [and 13 stops in ProRes]. This is a good result, especially considering the price of the camera. To put this into perspective Alan measured the Canon C300 mkII at 15 stops of dynamic range. Both the URSA Mini 4.6 and C300 mkII are bettered by the ARRI Alexa and Amira, but then that comes as no surprise given their reputation and price.

The Alan mentioned is Alan Roberts, something of a legend when it comes to testing cameras. Notably, he is one of the key players behind the TLCI (Television Lighting Consistency Index), a mooted replacement for CRI (Colour Rendering Index). That’s telling, because this whole dynamic range business is starting to remind me of my investigation into CRI, and is leading me to a similar conclusion: the numbers manufacturers give you are all but useless in real-world cinematography.

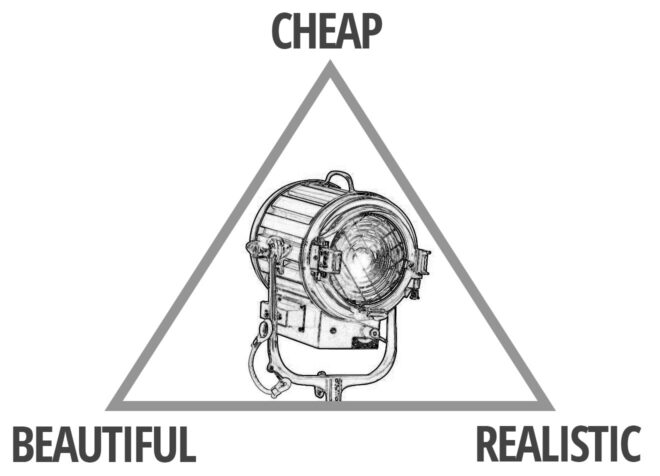

Whereas CRI at least has a standardised test, there’s no such thing for dynamic range. Therefore, until there is more transparency from manufacturers about how they measure it, I’d recommend ignoring their published values. As always when choosing a camera, shoot your own tests if at all possible. Even the most reliable numbers can’t tell you whether you’re going to like a camera’s look or not, or whether it’s right for the story you want to tell.

When tests aren’t possible, and I know that’s often the case in low-budget land, at least try to find an independent comparison. I’ll leave you with this video from The Slanted Lens, which compares the URSA Mini Pro G2 with the ARRI Amira, a camera that shares the Alexa’s ALEV III sensor. They don’t measure the dynamic range, but you can at least see the images side by side, and in the end it’s the images that matter, not the numbers.