At Christmas 1978, when Superman: The Movie opened to enthusiastic reviews and record-breaking box office, it was no surprise that a sequel was in the works. What was unusual was that the majority of that sequel had already been filmed, and stranger still, much of it would be re-filmed before Superman II hit cinemas two years later.

Jerry Siegel and Joe Shuster’s comic-book icon had made several superhuman leaps to the screen by the 1970s, but Superman: The Movie was the first big-budget feature film. Producer Pierre Spengler and executive producer father/son team Alexander and Ilya Salkind purchased the rights from DC Comics in 1974 and made a deal to finance not one but two Superman movies on the understanding that Warner Bros. would buy the finished products. Salkind senior had unintentionally pioneered back-to-back shooting the previous year when he decided to split The Three Musketeers – originally intended as a three-hour epic – into two shorter films.

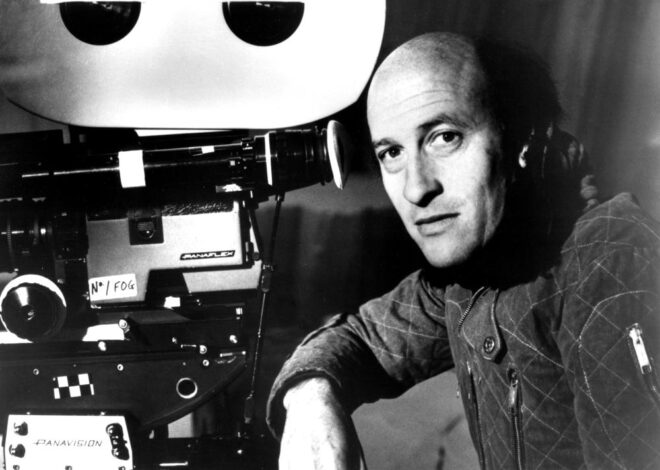

After packaging Superman I and II with A-listers Marlon Brando (as Kryptonian patriarch Jor-El) and Gene Hackman (as the villainous Lex Luthor), the producers hired The Omen director Richard Donner to helm the massive production. Donner cast the unknown Christopher Reeve in the title role, while John Williams was signed to compose what would prove to be one of the most famous soundtracks in cinematic history. Like many big genre productions of the time – Star Wars and Alien to name but two – Superman set up camp in England, with cameras rolling for the first time on March 24th, 1977.

“We were shooting scenes from the two films simultaneously, according to production conveniences,” explained creative consultant Tom Mankiewicz in a 2001 documentary. “So when we had Gene Hackman we were shooting scenes from II and scenes from I, or when we were in the Daily Planet we were shooting scenes from both pictures in the Daily Planet, while you were in that set.”

Today – largely thanks to Peter Jackson’s Lord of the Rings trilogy – we are used to enormous, multi-year productions with crew numbers in four figures, but the scale of the dual Superman shoot was unprecedented at the time, eventually reaching nineteen months in duration. It was originally scheduled for eight.

“Dick [Donner] never in the course of the picture got a budget; he never got a schedule,” claimed Mankiewicz. “He was constantly told that he was over schedule, way over budget, but nobody told him what that budget was or how much he was over that budget.”

Given that overspends were funded by Warner Bros. in return for more distribution rights, Spengler and the Salkinds were watching the value of their huge investment trickle away. So despite Donner’s popularity with the rest of the cast and crew, his relationship with the producers became ever more strained, to the point where they weren’t even on speaking terms.

Ilya Salkind suggested bringing in The Three Musketeers director Richard Lester, who agreed on condition that he would be paid monies still owed to him from that earlier film. By some accounts his role on Superman was that of a mediator between the director and the producers; by others he was a co-producer, second unit director or even a back-up director in case Donner cracked under the pressure of the endless shoot. “Where does this leave… Donner?” asked a newspaper report of the time. “‘Nervous,’ a cast member says.”

Eventually, with the first movie’s release date looming, the filmmakers decided on a change of plan. Superman II would be placed on the back burner in order to prioritise finishing Superman: The Movie – and get it earning money as quickly as possible. At this point, three quarters of the sequel was already in the can, including all scenes featuring Brando and Hackman, both of whom had had contractual wrap dates to meet.

Superman: The Movie was a hit, but Donner would not direct the remainder of its sequel. “They have to want me to do it,” he said of the producers at the time. “It has to be on my terms and I don’t mean financially, I mean control.” Of Spengler specifically, Donner reportedly said bluntly, “If he’s on it – I’m not.”

And indeed Donner was not. The Salkinds had no intention of acceding to his demands. Instead, the former mediator Richard Lester was hired to complete Superman II, and Donner received a telegram telling him that his services were no longer required. “I was ready to get on an airplane and kill,” he recalled years later, “because they were taking my baby away from me.”

Meanwhile Brando was trying (unsuccessfully) to sue the producers over royalties, and demanded a significant cut of the box office gross from the sequel. Rather than pay this, the producers elected to re-film his scenes, replacing Jor-El with Superman’s mother Lara, as played by Susannah York.

It was far from the only reshooting of Superman II footage that took place. Ironically, given the earlier budget concerns, Lester was permitted to redo large chunks of Donner’s material with a rewritten script in order to earn a credit as director under guild rules. Major changes included a new opening sequence on the Eiffel Tower, Lois Lane’s realisation of Clark Kent’s true identity after he trips and falls into a fireplace, and a different ending in which a magic kiss from Clark erases that realisation from her memory.

Some of the reshoots included Lex Luthor material, but Hackman declined to return out of loyalty to Donner; the result is the fairly obvious use of a double in the climactic Fortress of Solitude scene. The deaths of Geoffrey Unsworth and John Barry, plus creative differences between Lester and John Williams, meant that the sequel team also featured a new DP (Robert Paynter), production designer (Peter Murton) and composer (Ken Thorne) respectively, although significant contributions from all of the original HODs remain in the finished film.

Comparing his own directing style with Donner’s, Lester told interviewers, “I think that Donner was emphasising a kind of grandiose myth… There was a type of epic quality which isn’t in my nature… I’m more quirky and I play around with slightly more unexpected silliness.” Indeed his material is characterised by visual gags and a generally less serious approach, which he would continue into Superman III (1983).

Although some of the unused Donner scenes were incorporated into TV screenings over the years, it was not until the 2001 DVD restoration of the first movie that interest began to build in a release for the full, unseen version of the sequel. When Brando’s footage was rediscovered a few years later, it could finally become a reality.

“I don’t think there is [another] film that had so much footage shot and not used,” remarked editor Michael Thau. A vast cataloguing and restoration effort was undertaken to make useable the footage which had been sitting in Technicolor’s London vault for a quarter of a century. Donner and Mankiewicz returned to oversee and approve the process, which used only the minimum of Lester material necessary to tell a complete story, plus footage from Reeve’s and Margot Kidder’s 35mm screen tests.

Released on DVD in 2006, the Donner Cut suffers from the odd cheap visual effect used to plug plot holes, and a familiar turning-back-time ending which was originally scripted for the sequel but moved to the first film at the last minute. However, for fans of Superman: The Movie, this version of Superman II is much closer in tone to the original and ties in much better in story terms too. The Donner Cut is also less silly than the theatrical version, though it must be said that Lester’s humour contributed in no small part to the sequel’s original success.

Whichever version you prefer, 40 years on from its first release, Superman II is still a fun and thrilling adventure with impressive visuals and an utterly believable central performance from the late, great Christopher Reeve.