“Why are things so dimly lit today? Can barely see anything.” Such was a comment on a frame of my cinematography that I posted on Instagram last year. It was a night scene but far from the darkest image I’ve ever posted.

I remembered the comment recently when double Oscar-winning cinematographer Janusz Kamiński said something similar in an interview with British Cinematographer. He lamented what he perceives as a loss of lighting skills that accompanied the transition from celluloid to digital filmmaking: “Now everyone shoots dark… Pictures are so murky you need to crank up the TV to see it… They just don’t know how to light.”

I think there’s a tremendous amount of talent in today’s world of digital cinematography, but the technology might have encouraged a trend towards darker images. With celluloid it was always better to err on the side of over-exposure, as highlights would fall off attractively but shadows could get lost in the grain. With digital it is more advisable to lean towards under-exposure, to avoid the harsh clipping of highlights.

We should also consider that modern digital cameras have more dynamic range than film, so there is less risk inherent in under-exposing a scene, especially as you can see on your histogram exactly what detail you’re retaining. But the same should be true of over-exposure too.

The demand from streaming platforms for HDR delivery also encourages DPs and colourists to play more with very dark (or very bright) images. Most viewers will still see the results in SDR, however, and some crucial information at the edges of the dynamic range could get lost in the transfer.

The trend for darker images may have started even before the digital revolution though. “I think contemporary photography is going away from pretty pictures,” Dariusz Wolski told American Cinematographer in 1996, well over a decade before digital capture became the norm. “Something that is dark is really dark, and something that is bright is very bright. The idea is to stretch photography, to make it more extreme.”

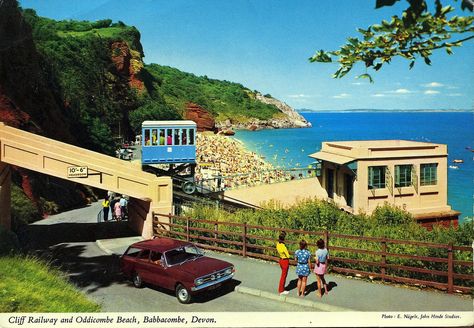

Wolski may have been onto something there: a trend towards more naturalistic images. You have only to look at a film made in the first half of the 20th century to see that lighting has become much more realistic and less stylised since then. Darker doesn’t necessarily mean more realistic, but perhaps it has become a convenient trick to suggest realism, much as blue lighting is a convenient trick to suggest night, one that has very little basis in how things look in the real world.

The most noticeable increase in darker images has been in TV – traditionally bright and flat because of the inherently contrasty nature of the cathode ray tube and the many lights and reflections contaminating the screen in a typical living room. Flat-screens are less reflective, less contrasty and generally bigger – and a dimmer image is easier for the eye to interpret when it’s bigger.

Perhaps people are more likely to draw the curtains or turn off the lights if they’ve splashed out on a TV so large that it feels a bit like a cinema, but what about all the mobile devices we have today? I went through a phase of watching a lot of Netflix shows on an iPad Mini on trains, and I was forever trying to keep the daylight off the screen so that I could see what was going on. It was annoying, but it was my own fault for watching it in a form that the programme-makers couldn’t reasonably be expected to cater for.

“A lot of people… watch it on small iPads, which in no way can do justice to a show like that anyway,” said DP Fabian Wagner in defence of the infamously dark Battle of Winterfell in Game of Thrones. I’ve never seen it, and I’m all for a DP’s right to shoot an image the way they see fit, but it sounds like he might have gone too far in this case. After all, surely any technique that distracts the audience or takes them out of the story has defeated its purpose.

So, the odd extreme case like this aside, is modern cinematography too dark? I think there is an over-reliance on moodiness sometimes, a bit like how early DSLR filmmakers were too reliant on a tiny depth of field. DPs today have so much choice in all aspects of crafting an image; it is a shame to discount the option of a bright frame, which can be just as expressive as a dark one.

But if a DP wants to choose darkness, that is up to them. Risks like the one Fabian Wagner took are an important part of any art-form. Without them, cinematography would go stale. And I for one would certainly not want that, the odd negative Instagram comment notwithstanding.

I joined this social media platform last summer, after hearing DP Ed Moore say in
